- TriggerDagRunOperator Airflow 2.0 example: how to

- TriggerDagRunOperator Airflow 2.0 example: update

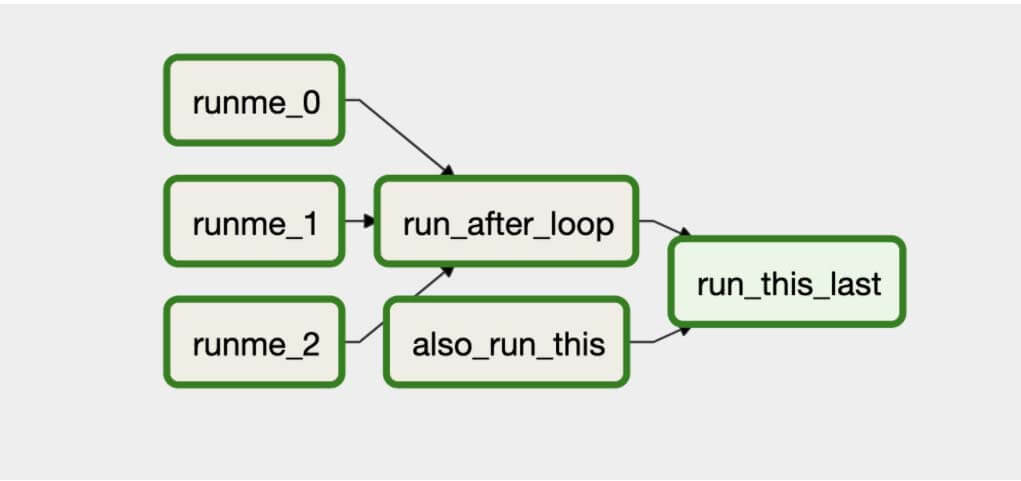

Triggers a DAG run for a specified `dag_id`.

Parameters:

- `trigger_dag_id` (str) – the dag_id to trigger (templated)
- `python_callable` (python callable) – a reference to a Python function that will be called while passing it the context object and a placeholder object `obj` for your callable to fill and return if you want a DagRun created. The object has a `payload` attribute that you can modify in your function. The `run_id` should be a unique identifier for that DAG run, and the payload has to be a picklable object that will be made available to your tasks while executing that DAG run.

DAG Dependencies (trigger): the example above looks very similar to the previous one. But if you look carefully at the red arrows, there is a major change.

There are multiple ways of achieving cross-DAG dependencies in Airflow, each represented by an example DAG in this repo. These methods include using the TriggerDagRunOperator, as highlighted in trigger-dagrun-dag.py.

class `DagRunOrder(run_id=None, payload=None)`

Bases: object

class `TriggerDagRunOperator(trigger_dag_id, python_callable=None, execution_date=None, *args, **kwargs)`


Example usage of the TriggerDagRunOperator:

- 1st DAG (`example_trigger_controller_dag`) holds a TriggerDagRunOperator, which will trigger the 2nd DAG.
- 2nd DAG (`example_trigger_target_dag`) will be triggered by the TriggerDagRunOperator in the 1st DAG.

Variables can be viewed using the Airflow UI under Variables, and you can create the variable list there as well. You can use JSON values to call multiple variables in an efficient manner, using the Jinja Template Engine. Best practice: try to fetch the variables within the tasks, to avoid making useless connections every time the scheduler parses the DAG file (every 30 seconds by default).

In this section, we shall study 10 different branching schemes in Apache Airflow 2.0.

All Success (default). Definition: all parents have succeeded.

There are two ways of defining dependencies. In the example, the trigger task `trigger_dependent_dag` is a TriggerDagRunOperator placed between two other tasks: `start_task >> trigger_dependent_dag >> end_task`.

What if you want to set multiple parallel cross-dependencies? Unfortunately, Airflow can't parse dependencies between two lists (e.g. …). If you need to set dependencies in this manner, you can use Airflow's chain function. A guide with an in-depth explanation of how to implement cross-DAG dependencies can be found here.
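To make the list-to-list case concrete, here is a plain-Python sketch of the pairing rule that a chain-style helper applies. It is a model for illustration only, not Airflow's implementation. In Airflow 2.x the real helper is imported with `from airflow.models.baseoperator import chain`. The function name `chain_edges` and the task names are hypothetical.

```python
from typing import Any, List, Tuple


def chain_edges(*steps: Any) -> List[Tuple[Any, Any]]:
    """Return the (upstream, downstream) pairs a chain-style call would create."""
    edges: List[Tuple[Any, Any]] = []
    for up, down in zip(steps, steps[1:]):
        up_is_list = isinstance(up, list)
        down_is_list = isinstance(down, list)
        if up_is_list and down_is_list:
            # Two adjacent lists are paired element by element,
            # so they must have the same length.
            if len(up) != len(down):
                raise ValueError("adjacent lists must have the same length")
            edges.extend(zip(up, down))
        elif up_is_list:
            # Fan-in: every task in the list precedes the single task.
            edges.extend((u, down) for u in up)
        elif down_is_list:
            # Fan-out: the single task precedes every task in the list.
            edges.extend((up, d) for d in down)
        else:
            edges.append((up, down))
    return edges


# Hypothetical task names standing in for operator instances.
edges = chain_edges(
    "start_task",
    ["trigger_a", "trigger_b"],
    ["check_a", "check_b"],
    "end_task",
)
for upstream, downstream in edges:
    print(f"{upstream} >> {downstream}")
```

With real operators, the equivalent call would be `chain(start_task, [trigger_a, trigger_b], [check_a, check_b], end_task)`, which avoids writing each pairwise `>>` by hand.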


This study guide covers the Astronomer Certification DAG Authoring for Apache Airflow. Apache Airflow is the leading orchestrator for authoring, scheduling, and monitoring data pipelines. The exam consists of 75 questions, and you have 60 minutes to write it. The study guide below covers everything you need to know for it. The exam includes scenarios (both text and images of Python code) where you need to determine what the output will be, if any at all. To study for this exam I watched the official Astronomer preparation course, and I highly recommend it.

According to Astronomer, this exam will test the following:

“You have to show your capabilities of understanding the different features that Airflow brings to create DAGs. What are the pros and cons of each one as well as their limitations. You should be comfortable for recommending settings and design choices for data pipelines according to different use cases. You should know the most common operators as well as the specificities of others allowing to define DAG dependencies, choose different branches, wait for events and so on”

Airflow also offers better visual representation of dependencies for tasks on the same DAG. However, it is sometimes not practical to put all related tasks on the same DAG. For example, two DAGs may have different schedules: a weekly DAG may have tasks that depend on other tasks on a daily DAG.

Variables are a generic way to store and retrieve arbitrary content or settings as a simple key-value store within Airflow. Variables can be listed, created, updated and deleted from the UI. Airflow uses Fernet to encrypt variables stored in the metastore database. It guarantees that without the encryption key, content cannot be read or manipulated. For information on configuring Fernet, look at Fernet.
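As a rough illustration of why grouping settings into one JSON value can be more efficient than several separate Variables, the sketch below counts lookups against a stand-in store. `FakeVariableStore` is hypothetical and is not Airflow's `Variable` class. In Airflow itself, the comparable call would be `Variable.get("pipeline_settings", deserialize_json=True)` made inside the task function. All keys and values here are made up.

```python
import json


class FakeVariableStore:
    """Stand-in for a key-value store that counts how many lookups are made."""

    def __init__(self, rows):
        self._rows = dict(rows)
        self.lookups = 0

    def get(self, key, deserialize_json=False):
        self.lookups += 1  # each call models one round trip to the metastore
        value = self._rows[key]
        return json.loads(value) if deserialize_json else value


settings = {"source_bucket": "raw-data", "target_table": "daily_sales", "retries": 3}
store = FakeVariableStore(
    {
        "source_bucket": "raw-data",
        "target_table": "daily_sales",
        "retries": "3",
        "pipeline_settings": json.dumps(settings),
    }
)


def task_with_separate_variables():
    # Three variables, three lookups.
    return (
        store.get("source_bucket"),
        store.get("target_table"),
        int(store.get("retries")),
    )


def task_with_json_variable():
    # One JSON variable, one lookup, fetched inside the task body.
    cfg = store.get("pipeline_settings", deserialize_json=True)
    return cfg["source_bucket"], cfg["target_table"], cfg["retries"]


store.lookups = 0
separate = task_with_separate_variables()
separate_lookups = store.lookups

store.lookups = 0
combined = task_with_json_variable()
combined_lookups = store.lookups

print(separate_lookups, combined_lookups)
```

Both functions return the same settings, but the JSON version needs a single lookup. Keeping the call inside the task function, rather than at module level, also means the lookup happens only when the task runs and not each time the DAG file is parsed.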
